Analytics Without Action, Dashboards Without Decisions

Moving the needle on student success

Was this forwarded to you by a friend? Sign up for the On Student Success newsletter and get your own copy of the news that matters sent to your inbox every week. If you are a subscriber, please forward this to others who may be interested.

In many years of advising clients on student success initiatives, the single biggest pattern I've seen is this: institutions identify a problem, procure a tool (early-alert, CRM, dashboard), implement it, and then stop. Once the system is live, follow-through stalls. Yet leaders still wonder why retention, completion, and satisfaction don't improve.

The missing link is post-implementation action: turning insights into interventions that, in turn, yield results. These actions can take many forms, depending on what the student success system reveals: more advising staff, new programs, curriculum redesign, or new training for student success staff. Whatever form the action should take, too often it doesn't happen. The student success problems remain, only now with a fancy new system or dashboard. The inaction puzzles me, and, to be fair, it often puzzles my clients. No one has convincingly explained why purchasing a system or collecting data isn't followed by a program designed to address what the data reveals.

This is one of the themes I want to explore in this newsletter over the next year. Today I'm laying out a few hypotheses for why system procurement, and the insights it produces, are so seldom followed by interventions to improve student success. In the months ahead, I'll test them.

To be clear, I'm not talking about campuses doing nothing. I'm focused on places that have declared student success a priority and invested in systems, yet see little movement because insight doesn't translate into action: interventions or programs. I'm also not claiming technology is the only lever. It isn't. But many institutions buy these tools and still don't see results. Those are the cases I want to understand.

The nature of the problem

Student success is a hair-on-fire emergency in U.S. higher ed. In 2023, full-time retention was 81.7% at four-year institutions and 65.1% at two-year colleges. The six-year graduation rate hovers around 65%; a depressingly long list of campuses have rates in the low double digits; and more than 43 million Americans have some college but no degree.

Given the severity, the stakes, and how long many institutions have been working on this, often by implementing student-success tools, we should be seeing more progress.

There are bright spots: Georgia State, the University of South Florida, and Lorain County Community College, among others, have posted notable gains. And there are signs the field is prioritizing this more broadly. This year, for the first time, I've seen many start-of-term announcements, which typically tout enrollment milestones, also highlight retention. Southern Illinois University Carbondale, for example, reported essentially flat enrollment but emphasized meaningful improvements in retention:

> overall enrollment on the 10th day of class for fall 2025 remains steady (flat) at 11,785 – only five fewer students than last year – which saw a record percentage enrollment growth. [snip] The success of the university's enrollment stability was also greatly enhanced by the strategic efforts of Academic Affairs, yielding a 5% growth in retaining continuing students – from 7,532 to 7,898.

Still, outcomes remain poor even as many institutions "take action," and we keep pointing to the same exemplars (e.g., Georgia State, whose work, don't get me wrong, is awesome). That suggests something is amiss. Student success is a wicked problem with many causes and no single fix. Even so, a core reason progress lags is the lack of follow-through after tools are implemented: systems get installed, dashboards light up, and then little changes.

What should we call this inaction?

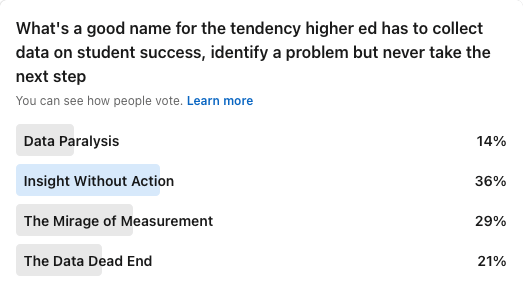

Maybe part of the problem is that we don't have a pithy name for it. A few months ago, I ran a LinkedIn poll to crowdsource one; here's how the votes shook out.

An even more perplexing question is why this inaction keeps happening. I don't have answers yet, but these are the hypotheses I will be exploring in this newsletter over the next year or so.

The no-strategy one-step

One strong reason we see so little progress, despite lots of activity, is that the purchase or development of a student-success-focused tool isn’t embedded in a broader student success strategy. That stands to reason: without a strategy, you haven’t clearly defined the problem or the destination. Lacking that, institutions don’t know which follow-up actions would actually address the issue, nor what staffing and resources are required to reach the goals.

Between 2016 and 2019, I spoke with many institutions that had bought a learning-analytics system from a well-known vendor. All but one failed to realize the value they expected. The tool was capable of generating useful insights, but those insights rarely led to programs that addressed the underlying issues, largely because the purchase was a one-off, disconnected from any larger effort.

That said, “no strategy” can become a catch-all that doesn’t move the conversation forward. We need additional, more specific hypotheses to explain why this keeps happening and how to break the pattern.

There is an app for that

Techno-solutionism, or the belief that every problem can and should be solved with technology, is rife in EdTech and student success. You see it in the reflex to start with tools instead of goals. A few years ago, after a talk I gave on centering the student experience (when that wasn't yet common in the U.S.), a campus leader rushed up, eager to improve the student experience on his campus. His first question wasn't how to map gaps or define outcomes. It was, "Which CRM should I buy?"

That tool-first instinct is especially insidious in student success because it smuggles in a second assumption: that the tool is enough. Institutions act as if a system, or even an ecosystem, will solve the problem by itself. I call this the air-conditioner view of student success: the room is hot and humid, so just install an AC unit and—presto—the climate is fixed.

To be fair, vendors often encourage this, implying that "buy X and Y will be solved." Remember the learning-analytics system described above? Many clients expected the system, on its own, to deliver actionable insights that would improve student success. But data is the start of the journey, not the finish. At best, good data shows how big the problem is and where to look; it doesn't interpret itself, and it certainly doesn't fix anything.

Worse, because the system was sold as a stand-alone fix, many institutions under-resourced the work. They didn’t staff it adequately, so they weren’t even getting high-quality data to begin with. The result was predictable: lots of dashboards, not many decisions.

Bedazzled by data

There's also a sub-variant of techno-solutionism: an excessive fixation on data. In student success, collecting, storing, and displaying metrics often becomes an end in itself, instead of a means to drive decisions and interventions that actually help students. Needless to say, this does not move the needle.

Silos and stakeholders

Another reason insights from student success tools rarely lead to action is who isn't at the table. Procurement and implementation decisions are often made without a broad enough group of stakeholders to carry the work forward. Because student success spans many touch points, a go-it-alone approach all but guarantees the teams you'll later depend on won't have buy-in. That's why so many tools underperform: a dashboard gets built, but it doesn't meet key users' needs, so it goes unused. This happens time and time again with early-alert systems, for example.

I once spoke with a client considering an early-alert system; when I asked how advising staff felt about it, they admitted they hadn't yet consulted them. I noted that, as I typically tell clients, most early-alert systems fail to reliably improve outcomes like retention. In their case, without advising at the table, that result was almost certain. Stakeholder engagement is always important in technology rollouts; in student success, it's absolutely critical.

The project-completed mentality

A fifth reason there's so little follow-up on insights from student success tools is the "done-at-deployment" mindset. Projects are often scoped narrowly (implement the early-alert system), and once the tool goes live, it's declared a success. Whether that stems from weak project management, design gaps, or understandable defensiveness, the result is the same: no plan for iteration, review, or outcomes monitoring. Even when the tool underperforms, no one revisits assumptions or asks why, so the post-implementation actions that would surface and fix the gaps never happen.

File under “other”

Other factors that likely contribute to weak follow-up include:

- Fear of what the data will reveal (e.g., equity gaps or ineffective practices).
- Fear of failure if follow-on actions are tried and results are public.
- Chronic budget and staffing constraints that leave no capacity to act.
- Preference for boutique pilots over scale, generating activity without systemic change.
- Privacy and compliance anxiety that chills the use of data to drive improvement.

Parting thoughts

If we are to move the needle on student success, we need to break this habit of inaction, whatever the reason behind it. To do that, we need to understand why it keeps happening. By laying out these hypotheses, I hope to bring more clarity to the issue so we can start the hard work of fixing it. Over the next year, many of my posts will explore them in more detail.

That said, a pithy term would still come in handy. Let's have another go at voting.

Help me identify a good term to describe the tendency in higher education not to focus on follow-on actions after problems have been identified in newly implemented student success technologies.

The main On Student Success newsletter is free to share in part or in whole. All we ask is attribution.

Thanks for being a subscriber.