This Week in Student Success

Data, dreams, and disillusionment

Was this forwarded to you by a friend? Sign up for the On Student Success newsletter and get your own copy of the news that matters sent to your inbox every week.

I am home alone this weekend, it is a little chilly, and so I am making stock. And tomato jam, and hot sauce, and chilli oil. But what was new in student success this week?

Democratizing data, or just disguising it?

Over at Educating AI, Nick Potkalitsky has a post about new student-success tools and LMS features aimed at democratizing data analysis. He’s writing about K–12, but the issue matters in higher education as well. More student-success platforms in higher ed now offer easier access to data visualizations and let users pose natural-language questions to their data.

Nick describes a similar push in K–12 and recounts an interaction between a school administrator and a vendor.

This fall, the major ed-tech players are making a big push to get their administrative analytics tools into districts for the 25-26 school year. The pitch is compelling: upload your student data (achievement scores, attendance records, behavior logs) and get instant insights about who’s at risk, what interventions to try, and where to focus your limited resources.

One superintendent told me she sat through a demo where the AI tool produced, in under thirty seconds, a beautifully written report identifying at-risk students, complete with recommended interventions and confident language about “engagement trajectories” and “behavioral patterns.” It would have taken her team days to compile something similar.

She had one question: “How did it know all that?”

The vendor smiled. “Machine learning. The AI analyzes everything.”

She pressed: “But how? What did it actually look at?”

The smile got tighter. “It’s a neural network trained on millions of data points. It identifies patterns humans would miss.”

This exchange may say as much about an account executive who was probably new and undertrained as it does about AI. Still, Nick's argument, that generative AI models don't “analyze” data in a statistical or causal sense but instead generate text that mimics the genre of analysis, is a useful caveat. Two additional points are worth making, though.

First, we shouldn’t conflate all AI with generative AI, and I worry Nick slips into that trap. Many student success tools use other forms of AI to analyze data and may be adding generative AI as a self-service layer on top—to help generate queries or visualizations. That is not the same as simply asking ChatGPT, “Who is at risk?”
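To illustrate the distinction, here is a minimal Python sketch of a generative layer used only to draft a query. Everything in it is hypothetical: the query format, the field names, and the `run_query` helper are my own assumptions, not any vendor's API, and the model's output is hard-coded rather than generated. The point is that the query itself is visible, and the answer comes from deterministic filtering rather than from generated prose.

```python
from dataclasses import dataclass

# Hypothetical: the structured query a generative model might return when a user
# asks "Which students are missing a lot of class?". In a real tool this would
# come from the model; here it is hard-coded so the sketch runs on its own.
generated_query = {"field": "attendance_rate", "op": "<", "value": 0.80}

# Only fields and operators the institution has vetted are allowed through.
ALLOWED_FIELDS = {"attendance_rate", "gpa_change"}
OPERATORS = {"<": lambda a, b: a < b, ">": lambda a, b: a > b}


@dataclass
class Student:
    student_id: str
    attendance_rate: float
    gpa_change: float


def run_query(query, students):
    """Validate a generated query, then execute it deterministically."""
    if query["field"] not in ALLOWED_FIELDS or query["op"] not in OPERATORS:
        raise ValueError(f"Unsupported query: {query}")
    compare = OPERATORS[query["op"]]
    return [s.student_id for s in students
            if compare(getattr(s, query["field"]), query["value"])]


students = [
    Student("A", attendance_rate=0.75, gpa_change=-0.6),
    Student("B", attendance_rate=0.95, gpa_change=0.1),
]
print(generated_query)                       # the query is visible and auditable
print(run_query(generated_query, students))  # ['A']
```

If the model drafts a bad query, the worst case is a wrong but inspectable filter, or a rejected one, rather than a confident paragraph with no traceable logic behind it.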

Second, Nick claims these new tools lack transparency.

The AI will generate responses to these questions. But those responses are themselves predictions of what explanations should sound like, not traceable logic. You’re not getting closer to understanding the actual reasoning. You’re getting increasingly sophisticated descriptions that maintain the appearance of analysis.

He contrasts this lack of transparency with more traditional systems.

For decades, districts have used analytical tools. When you ran a report in your Student Information System or built a pivot table in Excel, the process was straightforward:

* You decided what to measure (attendance below 80%, GPA drop over 0.5 points)

* The system counted and calculated based on your rules

* You got results you could verify (show me the 23 students who meet these criteria)

* You could trace every decision (why is this student flagged? Because they missed 15 out of 60 days)

It was limited, maybe too limited. A simple threshold might miss students who are struggling in ways that don’t show up in attendance percentages. But it was transparent. You knew exactly what you were measuring and why.
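To make that contrast concrete, here is a minimal Python sketch of the kind of transparent, rule-based flag the quote describes. The two thresholds come from the quote; the student records, field names, and the `flag_reasons` function are hypothetical. Every flag carries the reason it was raised.

```python
from dataclasses import dataclass

# Thresholds taken from the examples in the quote; the records below are made up.
MIN_ATTENDANCE = 0.80  # flag if attendance falls below 80%
MAX_GPA_DROP = 0.5     # flag if GPA drops by more than 0.5 points


@dataclass
class Student:
    student_id: str
    days_missed: int
    days_enrolled: int
    gpa_change: float  # negative means the GPA fell


def flag_reasons(s: Student) -> list[str]:
    """Return a human-readable reason for every rule the student trips."""
    reasons = []
    attendance = 1 - s.days_missed / s.days_enrolled
    if attendance < MIN_ATTENDANCE:
        reasons.append(f"missed {s.days_missed} of {s.days_enrolled} days "
                       f"({attendance:.0%} attendance)")
    if -s.gpa_change > MAX_GPA_DROP:
        reasons.append(f"GPA dropped {-s.gpa_change:.1f} points")
    return reasons


students = [
    Student("A", days_missed=15, days_enrolled=60, gpa_change=-0.2),
    Student("B", days_missed=3, days_enrolled=60, gpa_change=-0.7),
    Student("C", days_missed=2, days_enrolled=60, gpa_change=0.1),
]
for s in students:
    reasons = flag_reasons(s)
    if reasons:
        print(s.student_id, "flagged:", "; ".join(reasons))
```

Running it prints `A flagged: missed 15 of 60 days (75% attendance)` and `B flagged: GPA dropped 0.7 points`; student C trips neither rule. The limitation the quote names is visible too: anyone struggling in ways these two rules don't measure never shows up.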

That transparency may have existed when people were hand-rolling simple queries in Excel, but today's student-retention and early-alert systems, used by thousands of higher education institutions, are far from transparent. They often rely on opaque data models and algorithms and function essentially as black boxes.

Even so, his point stands. As we explore new ways to use AI to democratize data—and to free us from waiting on data analysts or relying solely on standard reports—we should be clear about what’s happening under the hood.

The speed of beer

Part of why I started this newsletter is that I firmly believe sharing knowledge is a prerequisite for advancing student success. This week I read Breakneck: China’s Quest to Engineer the Future by Dan Wang. I highly recommend it, even though it doesn’t cover education or China’s presence in Africa as much as I’d have liked.

I did, however, love this quote.

When I worked in Silicon Valley, people liked to say that knowledge travels at the speed of beer. Engineers like to talk to each other to solve technical problems, which is how knowledge diffuses.

This is why in-person conferences matter, and why we need to spend more time talking (and drinking, alcoholic or not) together.

Cool research

At The Learning Dispatch this week, Carl Hendrick rounded up a passel of compelling studies on learning with clear relevance for student success. The list is long, and fascinating, but I want to highlight two in particular.

Sleep disorders

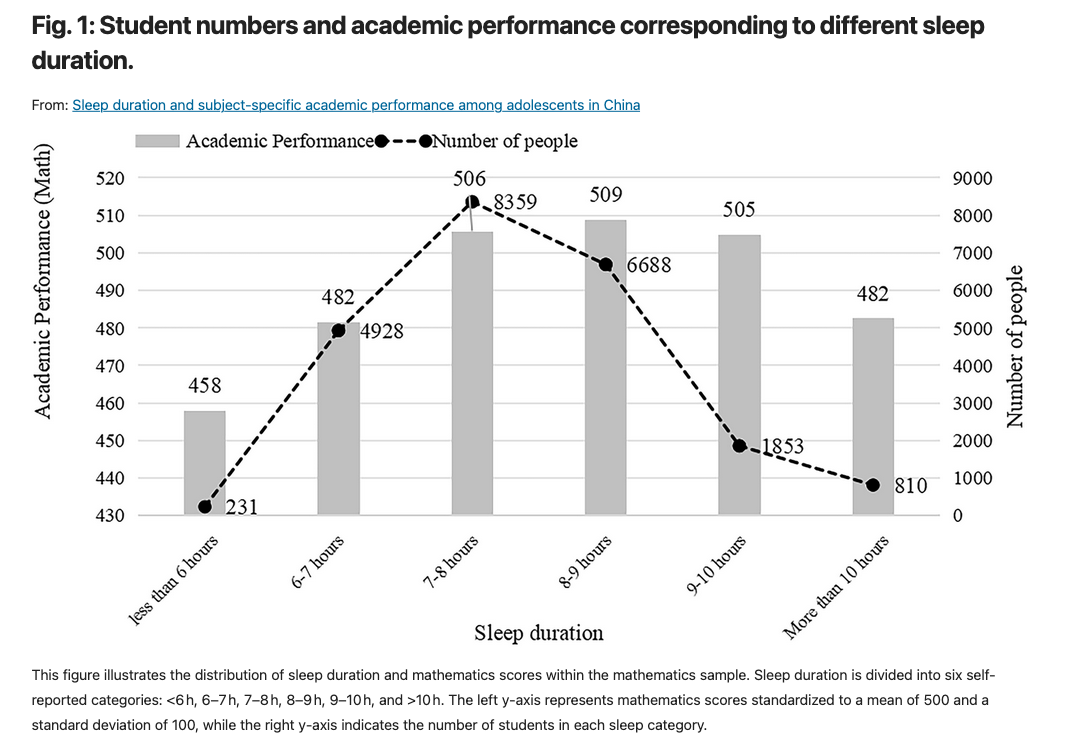

A team from the Shanghai Teacher Institute and Tongji University’s Institute of Higher Education conducted a study on how sleep duration correlates with academic performance across five subjects in a K–12 setting. They analyzed self-reported sleep duration alongside standardized test scores for 54,102 eighth-grade students across 717 middle schools in Shanghai.

Their key finding: an inverted U-shaped relationship between sleep and achievement, with optimal performance at around eight hours of sleep per night.
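For readers who want the shape spelled out: an inverted U is often modeled by adding a squared term to a regression. I don't know the exact specification the Shanghai team used, so treat this as a generic sketch, where $h$ is nightly hours of sleep and the $\beta$ coefficients are estimated from the data.

```latex
\text{score} = \beta_0 + \beta_1 h + \beta_2 h^2, \qquad \beta_2 < 0

\frac{d\,\text{score}}{dh} = \beta_1 + 2\beta_2 h = 0
\quad\Longrightarrow\quad
h^{*} = -\frac{\beta_1}{2\beta_2}
```

Because $\beta_2 < 0$, $h^{*}$ is a maximum: scores rise with sleep up to that point and fall beyond it. Under a model like this, the reported finding amounts to $h^{*} \approx 8$ hours.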

Sleep duration was more strongly associated with mathematics and science scores than with language or arts scores, and lower-achieving students and girls benefited more from sufficient sleep. Unsurprisingly, homework load and electronic device use were the biggest factors reducing sleep.

I’m certainly not going to fault the study for sample size. I do worry about the self-reported sleep measure and about unobserved variables, such as employment or child/dependent care, that could both reduce sleep and depress scores.

That said, even though this is a K–12 study, the implications for higher education are obvious. Many of our students are still adolescents—or close to it—and insufficient sleep likely affects performance, especially for those away from home and a structured environment for the first time.

We kind of knew this all along

But it is good to get additional empirical evidence of it.

A mixed-methods secondary analysis of student evaluations of teaching (SETs) found that, beyond their well-documented biases, SETs do not reflect genuine learning and can distort incentives. In settings where a minimum SET score was required for instructor evaluation, grades tended to rise.

Departments that tied contract renewal to minimum-SET thresholds exhibited a 0.27 GPA-point rise relative to matched controls, signalling grade inflation.

An important aspect of my current editorial policy

Money can't buy you love (or retention, apparently)

Ignoring my own advice.

In an absolute firecracker of an article, Josh Moody at Inside Higher Ed explores what has happened at New College of Florida in the two years since Governor Ron DeSantis fundamentally changed the institution’s leadership and direction.

From a student success perspective, one thing stands out: retention and graduation rates have declined over the past two years, even as spending has skyrocketed.

New College spends more than 10 times per student what the other 11 members of the State University System spend, on average. While one estimate last year put the annual cost per student at about $10,000 per member institution, New College is an outlier, with a head count under 900 and a $118.5 million budget, which adds up to roughly $134,000 per student.
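The arithmetic holds up. Here is a quick Python check using only the figures quoted above. Two readings are my own assumptions: that "about $10,000 per member institution" means the average annual cost per student at the other institutions, and that 900 students is the ceiling implied by "a head count under 900."

```python
# Figures from the article; the exact head count is only given as "under 900".
budget = 118.5e6               # New College annual budget, in dollars
reported_per_student = 134_000
system_average = 10_000        # assumed: average cost per student elsewhere in the system

# Head count implied by the $134,000 figure
implied_headcount = budget / reported_per_student

# Even at the 900-student ceiling, spending per student stays far above average
ceiling_per_student = budget / 900

print(f"Implied head count: {implied_headcount:.0f}")
print(f"Per-student spending at 900 students: ${ceiling_per_student:,.0f}")
print(f"Ratio to system average: {reported_per_student / system_average:.1f}x")
```

The implied head count is about 884, and even at 900 students the figure is roughly $131,667 per student, more than 13 times the system average, so "more than 10 times" is, if anything, conservative.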

Josh noted that both retention and graduation have declined since 2023 but didn’t provide data to support that, and recent (i.e., non-IPEDS) numbers are hard to find and, when found, difficult to parse.

According to a news report from May of this year, some faculty have also complained about a lack of transparency around enrollment and retention data.

Faculty and staff at New College have been complaining about a lack of transparency by their leadership when it comes to enrollment and retention numbers. At a special board meeting on Wednesday, the new provost presented to the trustees the accountability report the honors college will provide to the state panel that oversees public universities. More than two years after the takeover by the governor, it admits that New College of Florida has a retention problem.

[snip]

The current graduation rate is at 40% – an all-time low – but administrators promise that figure will jump to 60% for the class that enrolled last year – which would be an all-time high.

According to the same news report, 23% of New College students are international. The figure came up when the student representative on the Board of Trustees questioned plans to recruit more out-of-state and international students and raised concerns that support services for international students were inadequate.

Student Trustee Kyla Baldonado followed a line of questioning about the college’s intention to recruit more out-of-state and international students to grow. Responding to her questioning, Corcoran [the President of New College] said that already, 23% of New College students are from abroad, more than the 20% the state recommends. Baldonado expressed concern about the lack of specific services for international students on the small Sarasota campus.

Many of the new international students on campus are athletes.

Musical coda

The 80 greatest guitar intros, played by a single guitarist. He doesn't quite nail a couple of them, but it's extraordinary. I'm not sure I could even name 80 songs without a list. H/T kottke.

The On Student Success newsletter is free to share in part or in whole. Please share widely. All we ask is attribution.

Thanks for being a subscriber.